Yesterday, Google released Gemini 3. You’ve probably seen people highlighting benchmark scores, Elo ratings, technical comparisons to OpenAI, etc.

If you’re a developer or a data scientist, those numbers matter. But if you are a photographer, a designer, a filmmaker, or an entrepreneur, you likely have a different question: Does this actually change how I work?

I’ve spent quite a bit of time with Gemini 2.5 Pro, and after experimenting a bit with Gemini 3, I can confidently say that yes, it IS that good. And it definitely SHOULD change the way you work.

The “Deep Think” reasoning capabilities, the native image/audio/video integration, the new agentic workflows… This thing is a BEAST of a model, and it’s technically still in preview.

Here is a breakdown of what matters in this release and how you can leverage it.

Nano Banana 2 in Gemini 3 is shockingly good at style transfer (credit: @ai_artworkgen on X)

What’s new and special about Gemini 3?

Google released Gemini 3 on November 18, 2025. The release includes integration into Google Search (AI Mode), a new standalone app design, and specific developer tools like Google Antigravity.

Four specific updates are relevant to creative workflows:

- Deep Think Mode: This feature allows the model to process complex problems before generating an answer. It tests multiple hypotheses and validates its own logic.
- Native Multimodality: The model processes images (Nano Banana), text, audio, video, and code simultaneously. It understands audio directly, including tone, pitch, and prosody, rather than transcribing it to text first.
- Agentic Workflows: Through the new “Google Antigravity” platform, the AI can execute multi-step tasks autonomously rather than just providing information.
- Day 1 Partnerships: Gemini 3 is in Figma Make, Replit, Weavy, Flora, and many more popular apps. Google was clearly cooking behind the scenes on this one!

Deep Think applies logic to creative structure

The marketing for Gemini 3 highlights its performance on math benchmarks, and the scores are indeed impressive. But for a creative professional, this “reasoning” capability applies more to project architecture.

Previous models often struggled with long-form coherence. You’ve probably seen this: if you asked an AI to outline a documentary, it might provide a generic structure. With Deep Think, the model can handle conflicting constraints. You can provide it with transcripts of 50 interviews, a runtime limit of 40 minutes, and a specific thematic goal. The model can then determine which clips fit the narrative arc while adhering to the time constraints.

This feature organizes chaos rather than generating ideas. The reasoning capability allows you to offload the structural sorting of raw materials, such as notes, footage logs, and drafts, so you can focus on editorial decisions.
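If you’re curious what “conflicting constraints” looks like as a problem, here is a toy Python sketch of the documentary example: pick the clips with the best thematic fit that still squeeze into the runtime. To be clear, this is not how Gemini works under the hood. The `Clip` fields, the relevance scores, and the `pick_clips` helper are all made up for illustration, and the selection logic is a plain textbook knapsack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    name: str
    seconds: int    # clip duration
    relevance: int  # hypothetical 1-10 score for how well it fits the theme


def pick_clips(clips, limit_seconds):
    """Return (total relevance, clip names) for the best set that fits the runtime."""
    # best[t] holds the best (score, names) achievable with at most t seconds
    best = [(0, ())] * (limit_seconds + 1)
    for clip in clips:
        # Walk the time budget backwards so each clip is used at most once
        for t in range(limit_seconds, clip.seconds - 1, -1):
            score, names = best[t - clip.seconds]
            candidate = (score + clip.relevance, names + (clip.name,))
            if candidate[0] > best[t][0]:
                best[t] = candidate
    return best[limit_seconds]


# Invented footage log: four interview clips and a 40-minute (2400 s) cap
footage = [
    Clip("founder_origin", 600, 5),
    Clip("factory_tour", 1200, 8),
    Clip("customer_story", 1500, 9),
    Clip("closing_reflection", 900, 6),
]
score, chosen = pick_clips(footage, 40 * 60)
print(score, chosen)
```

The real value of the model is that it reads the transcripts and comes up with those relevance judgments for you. The arithmetic is the easy part.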

Gemini 3 actually understands the 3D space of an image. Perhaps not 100% perfectly – but still incredibly impressive (and useful)

Visual and audio capabilities

The most significant update for creatives in Gemini 3 is native multimodality. In previous iterations, the AI essentially read a transcript of uploaded video or audio. Now, it processes the media directly.

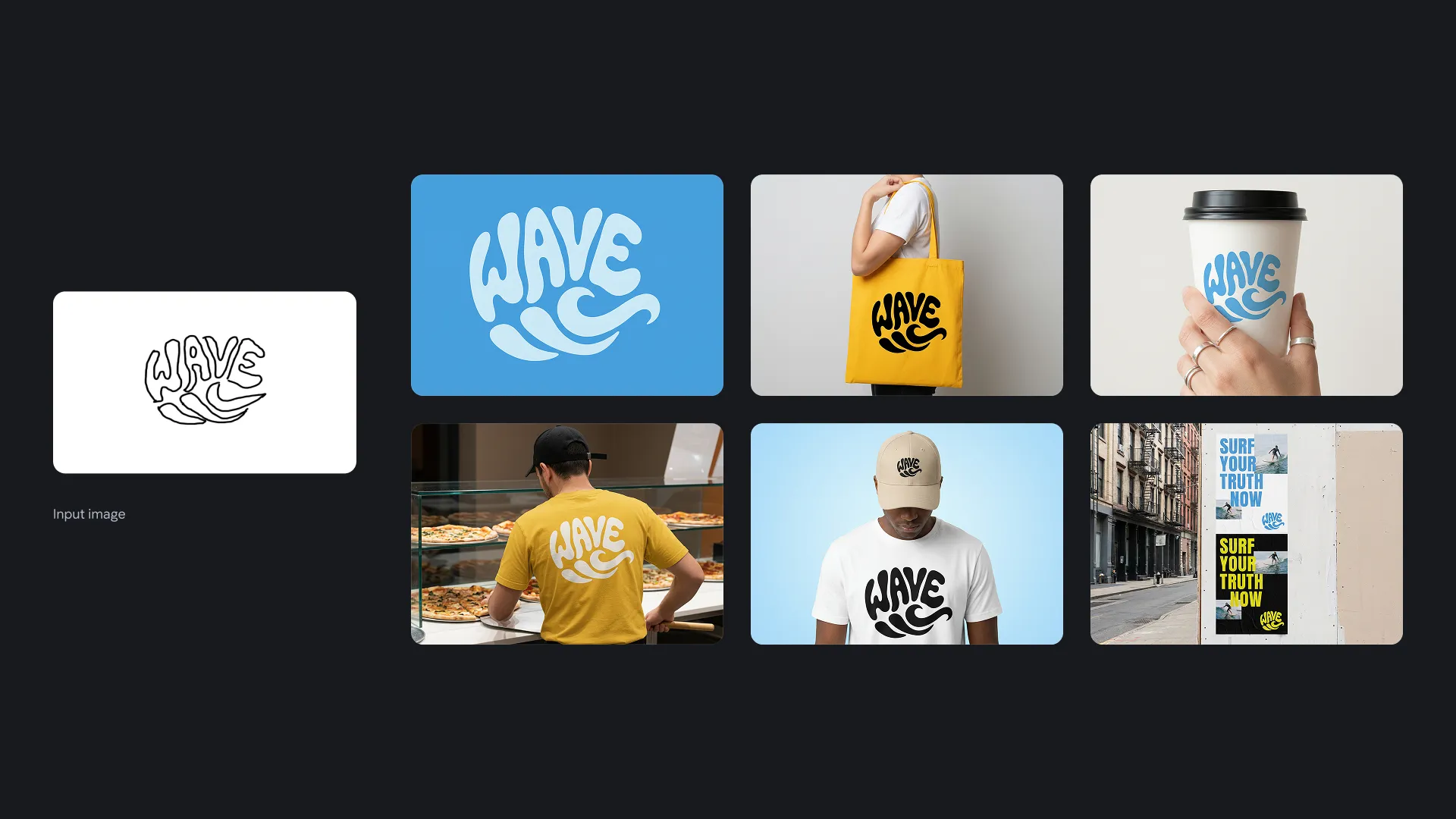

Instant mockups. And yes, it actually works this well. Literally just ask for what you want, and you can one-shot it 90% of the time.

Images: Nano Banana Pro

Nano Banana (the Gemini 2.5 Flash image generator) was already great, and the new update based on Gemini 3 is even better. It has better prompt adherence, a better understanding of the content and 3D space in an image, and higher-resolution output (up to 4K). It’s a great way to create fast (but quality) mockups of packaging, pre-visualize a photo shoot, etc. And WOW it’s good. Honestly, maybe the most powerful visual creation tool I’ve ever used, or at least since Photoshop got layers back in the 90s. Yes, Gemini 3 is that impressive!

And for those of you working in apps like Weavy, it’s integrated there as well.

Video and Filmmaking: Veo 3 integration

With the Veo 3 model integrated, video generation produces higher-fidelity output.

- Consistency Control: You can use “Ingredients to Video” to upload reference images of a character or product. The AI generates new scenes while keeping those visual elements consistent.
- Scene Extension: If a clip ends too abruptly, Gemini 3 can generate additional footage that matches the lighting, camera movement, and action of the original source.
- Edit-Ready Assets: The model generates video with native audio. Dialogue and sound effects are synchronized to the movement.

Audio and Podcasting

Because Gemini 3 processes audio natively, it detects nuances that transcription misses. Podcasters can upload a raw mix and ask the model to identify moments where a guest sounds hesitant or nervous. The model identifies the vocal tone rather than just the words spoken.

The new “Audio Overview” feature lets you turn research notes into podcast-style audio discussions. Audio becomes both an input and an output.

Image to App

This update connects design and development. You can sketch a user interface on paper, photograph it, and Gemini 3 will write the code to make that sketch a functioning interactive website. This is often referred to as “Vibe Coding,” where you design the visual and the AI handles the syntax. For the technical folks, Google also launched a Cursor-like IDE called Antigravity.

Agentic workflows reduce friction

The introduction of Google Antigravity and improved agents signals a move away from chatbots. A chatbot waits for a question, whereas an agent performs a task.

In a creative context, this automates administrative work. Instead of asking the AI how to export a file, you can authorize an agent to organize a hard drive, rename files based on metadata, or convert a batch of raw video files into proxies for editing.

Removing the friction of file management, scheduling, and formatting allows you to reclaim mental energy for production.
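For a sense of what that admin work looks like when it’s written down, here is a minimal Python sketch of the kind of script an agent might draft for you. This is not Antigravity’s actual output or API. The `plan_renames` and `proxy_command` helpers, the date-based naming scheme, and the 720p proxy settings are all assumptions for illustration. It only plans the work: nothing is renamed and ffmpeg is never run.

```python
from datetime import date
from pathlib import PurePath


def plan_renames(shots):
    """Map each original filename to a date-based name like 2025-11-18_001.mov.

    `shots` is a list of (filename, shoot_date) pairs. In practice the date
    would come from the file's metadata; here it is passed in directly.
    """
    plan = {}
    per_day = {}  # running counter for each shoot date
    for filename, shot_on in sorted(shots, key=lambda s: (s[1], s[0])):
        per_day[shot_on] = per_day.get(shot_on, 0) + 1
        suffix = PurePath(filename).suffix.lower()
        plan[filename] = f"{shot_on.isoformat()}_{per_day[shot_on]:03d}{suffix}"
    return plan


def proxy_command(source, height=720):
    """Build (but do not run) an ffmpeg command for a small H.264 editing proxy."""
    target = str(PurePath(source).with_suffix("")) + "_proxy.mp4"
    return [
        "ffmpeg", "-i", source,
        "-vf", f"scale=-2:{height}",  # fixed height, width scaled to match
        "-c:v", "libx264", "-crf", "23", "-preset", "fast",
        "-c:a", "aac",
        target,
    ]


# Invented example: three clips from a two-day shoot
shots = [
    ("B.MOV", date(2025, 11, 18)),
    ("A.MOV", date(2025, 11, 18)),
    ("C.mp4", date(2025, 11, 17)),
]
for old, new in plan_renames(shots).items():
    print(old, "->", new)
print(" ".join(proxy_command("A001.MOV")))
```

Keeping the plan separate from the execution is deliberate: you get to review what the agent intends to do to your hard drive before anything actually changes.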

Replit’s Gemini partnership rolled out on day 1 as well

Creative taste remains the big variable

With Gemini 3, the technical barrier to entry has been lowered. The tools for organizing, coding, and generating high-fidelity assets are faster and more logical.

This makes personal taste your most valuable asset. When technical execution becomes trivial, value shifts to selection.

- Curation over creation: The ability to generate 100 variations of a logo is not useful if you cannot identify the one that resonates emotionally.
- Story over spectacle: High-resolution video generation is accessible to everyone, but compelling narratives remain rare.
- Intent over output: The AI can build what you request, but it cannot determine why you should build it.

Give it a shot

If you haven’t used AI for anything more than prompting ChatGPT here and there, the Gemini 3 release is the perfect time to dig deeper:

- Test “Deep Think” on archives: Upload a disorganized folder of notes or old blog posts and ask the model to find connecting themes.
- Try a “Vibe Coding” project: Sketch a simple tool on paper, upload the photo, and ask Gemini to write the code for it.
- Experiment with “Scene Extension”: Take a short video clip from your camera roll and ask Veo 3 to extend the narrative by another 5 seconds.

The tools are better. The mandate remains the same: Make work that matters.