AI image generation tools had been bubbling under for a while, but they truly exploded into the mainstream when the “Studio Ghibli” filter trend went viral, powered by GPT-4o’s native image generation in ChatGPT. Since then, it’s been impossible to ignore the flood of tools like Midjourney, ComfyUI, and Higgsfield, all capable of creating stunning, surreal, and sometimes bizarre images from a simple text prompt.

The latest name in this conversation is Nano Banana Pro (Google’s Gemini 3 Pro Image), but it represents a slightly different and, for photographers, arguably more practical evolution. While other tools focus on generating images from scratch, Nano Banana’s real power is its focus on editing. It’s built for a workflow where you start with an existing photo and refine it through a natural language conversation, making it less of a novelty “art button” and more of a skilled studio assistant.

Sound interesting? It is…

What is Nano Banana Pro, aka Gemini 3 Pro Image?

“Nano Banana” began as the codename for Google’s Gemini 2.5 Flash Image model; Nano Banana Pro, the version covered here, is officially known as Gemini 3 Pro Image. You can access it through the free Gemini app and Google’s AI Studio. Nano Banana can generate images from scratch (and does a very, very good job), but for photographers, the most interesting application is likely to be its insanely good editing capabilities.

The key difference lies in the core technology. Most generators like Midjourney or Stable Diffusion are diffusion models—highly specialized systems trained to do one thing: build an image from noise based on a text prompt. They are brilliant at aesthetics but don’t fundamentally understand *what* is in the images they create.

Nano Banana is different. It’s a native multimodal large language model (M-LLM). This means it was designed from the ground up to understand and reason across multiple types of data—text, images, audio, video—simultaneously. For a photographer, this translates into a monumental shift. You’re no longer just shouting commands into a text box and hoping for the best. You’re engaging in a dialogue with a tool that genuinely understands the content and context of the image you’ve given it. It doesn’t just see pixels; it sees a “shoe,” a “logo,” or a “reflection on the water.” This deep understanding is what allows for the precise, conversational editing that diffusion models struggle with, turning the entire creative process from a one-way street into a collaborative session.

Super easy, fun way to edit: just make notes directly on the image and upload it to Gemini

How Photographers Can Actually Use Nano Banana

This is where theory meets practice. Abstract tech is useless until you can apply it to a real-world workflow. So, how can a professional photographer use a tool like this to save time, unlock new creative avenues, and deliver better work for clients?

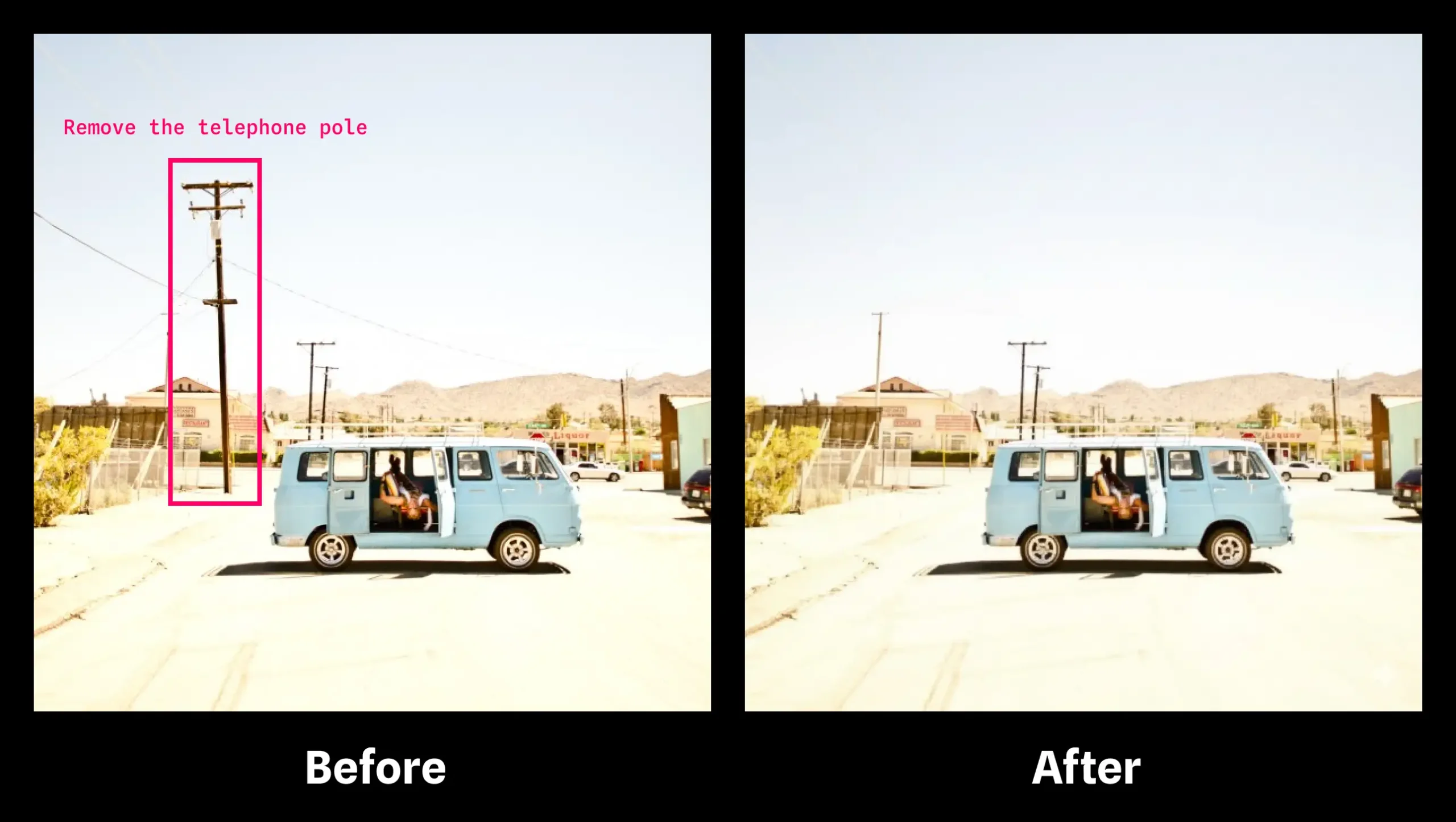

A Conversational Retouching Assistant

Imagine you’ve just finished a product shoot. You have 50 images of a new sneaker, but the client decides the shade of green on the logo is slightly off. The old way involves a painstaking trip back into Photoshop or Lightroom for meticulous masking and color adjustments on every single shot.

The new way is to upload a reference image to Gemini and start a conversation:

- “See the logo on this shoe? Change its color to Pantone 15-0343.”

- “Now, apply that same change to these other 49 images, making sure to preserve the lighting and shadows.”

This is the power of context-aware editing. Because the model understands both the content of the image and your natural language instructions, it can perform complex, iterative edits that would traditionally require significant manual labor, letting you stay focused on the core creative work. This is especially powerful for maintaining consistency across a large set of images, a non-negotiable for commercial work.

For e-commerce shoots, lookbooks, or event photography, where a specific person or object needs to look identical in every frame, this is hugely helpful.
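If you’d rather script a batch like the 49-image logo fix than upload files by hand, the Gemini API can be called in a loop. Below is a minimal Python sketch, assuming Google’s `google-genai` SDK, an API key set in your environment, and the model ID `gemini-3-pro-image-preview` (an assumption; check Google’s current model list). The helper names `make_gemini_editor` and `batch_edit` are hypothetical. The editing call is passed in as a function so the batching logic can be tried without network access.

```python
"""Minimal sketch: apply one edit instruction to a folder of photos.

Assumptions (not from the article): the google-genai SDK, an API key in the
environment, and the model ID below. Helper names are hypothetical.
"""
from pathlib import Path
from typing import Callable

# An editor takes (image bytes, MIME type, prompt) and returns edited image bytes.
Editor = Callable[[bytes, str, str], bytes]

MIME_TYPES = {".jpg": "image/jpeg", ".jpeg": "image/jpeg", ".png": "image/png"}


def make_gemini_editor(model: str = "gemini-3-pro-image-preview") -> Editor:
    """Build an Editor backed by the Gemini API (model ID is an assumption)."""
    # Imported lazily so batch_edit can run without the SDK installed.
    from google import genai
    from google.genai import types

    client = genai.Client()  # reads the API key from the environment

    def edit(image: bytes, mime_type: str, prompt: str) -> bytes:
        response = client.models.generate_content(
            model=model,
            contents=[types.Part.from_bytes(data=image, mime_type=mime_type), prompt],
        )
        # Return the first image part in the reply; text parts are ignored.
        for part in response.candidates[0].content.parts:
            if part.inline_data is not None:
                return part.inline_data.data
        raise RuntimeError("model returned no image")

    return edit


def batch_edit(src: Path, dst: Path, prompt: str, edit: Editor) -> list[Path]:
    """Send every JPEG/PNG file in `src` through `edit` with the same prompt."""
    dst.mkdir(parents=True, exist_ok=True)
    written = []
    for path in sorted(src.iterdir()):
        mime = MIME_TYPES.get(path.suffix.lower())
        if mime is None or not path.is_file():
            continue  # skip sidecar files, RAW files, folders, etc.
        # Saved with a .png suffix; this sketch assumes the API returns PNG data.
        out = dst / f"{path.stem}_edited.png"
        out.write_bytes(edit(path.read_bytes(), mime, prompt))
        written.append(out)
    return written
```

A real run might look like `batch_edit(Path("sneaker_shoot"), Path("sneaker_edited"), "Change the logo color to Pantone 15-0343, preserving the lighting and shadows.", make_gemini_editor())`. Sending each image separately with the same prompt keeps every request simple; review the results, since consistency across the set still isn’t guaranteed.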

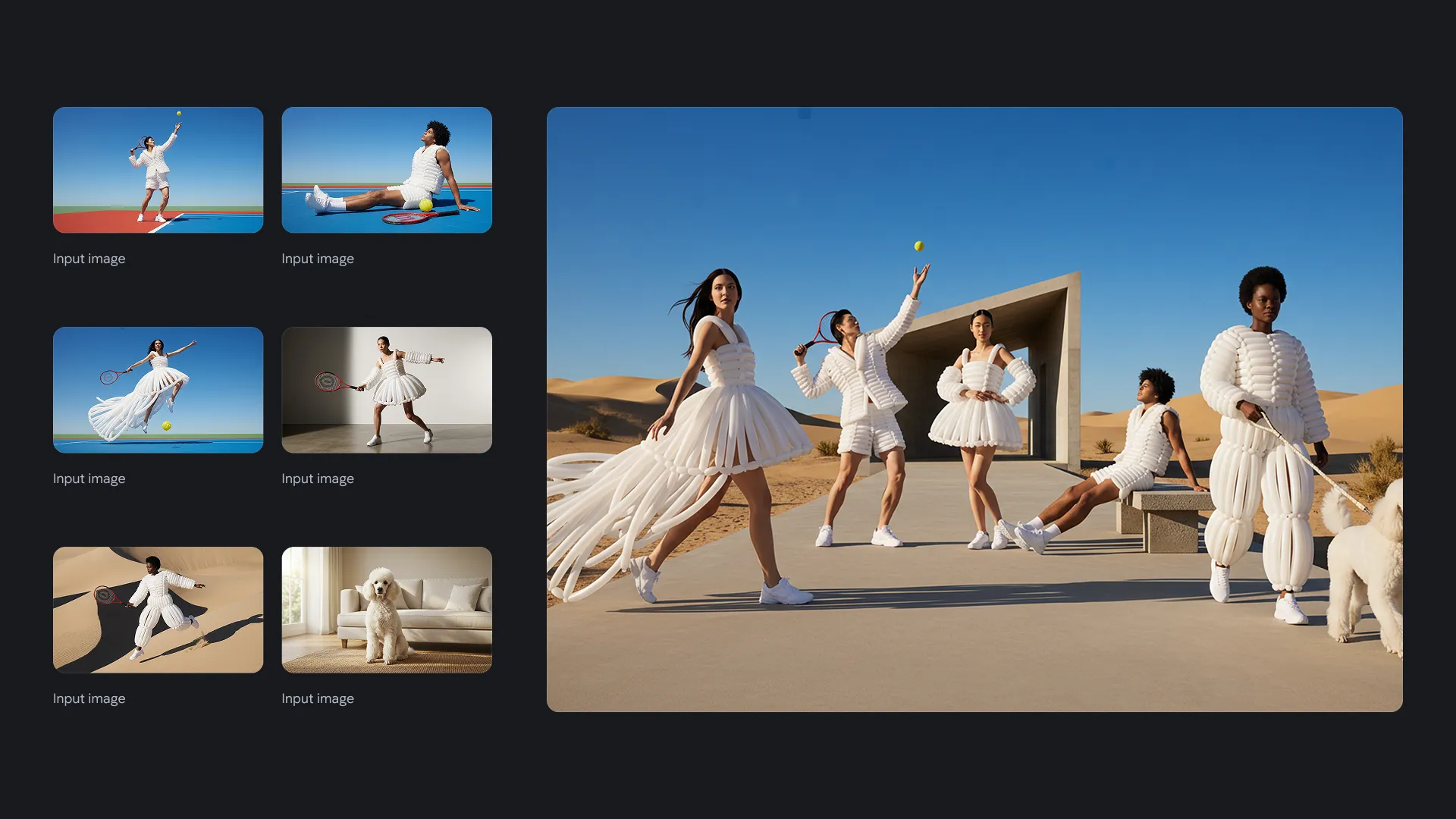

The Ultimate Location Scout and Mockup Tool

Client work is often about managing expectations and getting buy-in on a creative vision. Before you spend thousands on a location shoot, you need to know the concept works. This is where Nano Banana becomes an unparalleled pre-visualization engine.

Take a test portrait you shot in your studio. You can upload it and start riffing:

- User: “Put this person on a busy street in Tokyo at night, with lots of neon signs.”

- Gemini: (Generates the image)

- User: “I like it, but make the lighting more dramatic. Cast a strong key light from the left and add a bit of atmospheric haze.”

- Gemini: (Generates the revised image)

- User: “Okay, perfect. Now put the exact same person, with the same expression and outfit, in a minimalist art gallery with soft, diffused light.”

- etc…

Within minutes, you’ve created a series of high-fidelity mockups that communicate a clear creative direction to your client. This ability to preserve a subject’s likeness while radically changing the environment is a game-changer for mood boards, client pitches, and your own creative exploration.

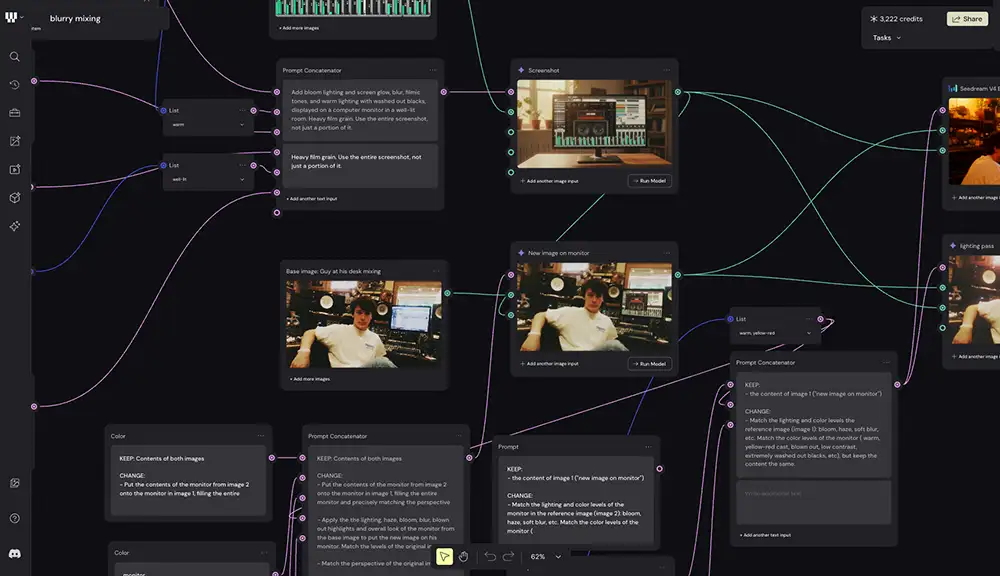

It’s especially great in a workflow app like Weavy for quick iteration (and you’ll get higher resolution this way).

The Perfect Complement to Generative AI

Many photographers have experimented with diffusion models like Midjourney and found them to be incredible engines for aesthetics. They can create stunning, stylized, and beautiful images that would be impossible to shoot. Their weakness, however, is a lack of precise control because they don’t truly understand the *content* of the image they’re making. They’re brilliant at vibes, but terrible at details—think six-fingered hands, logos that are just gibberish, or a product that isn’t *quite* right.

This is where Nano Banana becomes the perfect professional complement. The new workflow is a two-step process:

- Generate for Aesthetics: Use a tool like Midjourney to create a selection of visually beautiful images that nail the mood, color palette, and style you’re after.

- Refine with Conversation: Take your favorite-but-flawed image from Midjourney and bring it into Nano Banana to fix the details. You can “talk” to the image and correct the elements the diffusion model got wonky. For example: “See the hand on the model? It has six fingers. Please correct it to show five.” Or, “The text on that sign is nonsense. Please remove it and leave the sign blank.”

This workflow gives you the best of both worlds: the raw aesthetic power of a diffusion generator and the precision, context-aware control of a multimodal editor. You’re no longer stuck with the beautiful-but-unusable image; you can now take it over the finish line.

Details on how to use Nano Banana in Photoshop

Integration Directly Into Your Workflow

Perhaps the most significant development for working pros is that these tools are no longer just standalone apps; they’re being integrated directly into the software you already use.

For example, the latest update to Photoshop lets you use third-party models like Nano Banana and Flux Kontext directly in Photoshop. This means you can use Nano Banana’s conversational and generative power (for tasks like colorizing old photos, changing a subject’s pose, or removing complex objects like scaffolding) from a panel inside your main editing environment, right alongside tools like Generative Fill. This removes the friction of exporting and importing, embedding this powerful assistant directly into your existing creative process.

Admittedly, I haven’t truly put it through its paces, but my initial testing was a little underwhelming compared with using Nano Banana on its own or in Weavy. I expect Adobe will make improvements.

Note that it doesn’t yet work with Affinity Studio, for anyone who’s considering the switch.

Limitations and Commercial Realities

Nano Banana is definitely a step change when it comes to AI image editors, but we’re still early in this category, and there are some limitations that professionals in particular will want to be aware of:

The watermark

If you’re using the free Gemini app to edit or generate images, Google adds a visible watermark to the corner. For any professional use case, this is a non-starter. Using the tool via its API or through third-party integrations is the path to unwatermarked, pro-level work. Note that Google also embeds an invisible SynthID watermark in images its models create or edit, so “unwatermarked” here means no visible mark.

Quality can degrade

While the initial edits are often stunning, the model can sometimes lose fidelity after multiple successive changes to the same image. Think of it as digital generation loss: it excels at one or two major transformations, but endless tweaking can lead to soft or artifacted results.

Resolution

Nano Banana Pro currently operates at up to 4K. That’s big enough for many commercial uses, but may be an issue for projects that need super high res. You can try to run the output through an AI upscaler like Topaz or Magnific, but it’s an extra step and will never have the same fidelity as a natively high-resolution image from the start.
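To put “up to 4K” in print terms, here is a quick back-of-the-envelope calculation in Python. It assumes a long edge of about 4,096 pixels (actual 4K dimensions vary with aspect ratio) and the common 300 ppi target for high-quality prints; both numbers are assumptions, so swap in your own.

```python
"""Back-of-the-envelope print math for a ~4K output (assumed 4,096 px long edge)."""


def max_print_inches(pixels: int, ppi: int = 300) -> float:
    """Largest print dimension, in inches, at the given pixels per inch."""
    return pixels / ppi


def upscale_factor(pixels: int, target_inches: float, ppi: int = 300) -> float:
    """Enlargement needed to print target_inches at ppi (1.0 means no upscale)."""
    needed = target_inches * ppi
    return max(1.0, needed / pixels)


long_edge = 4096  # assumption: 4K output with a ~4,096 px long edge
print(f"{max_print_inches(long_edge):.1f} in at 300 ppi")  # 13.7 in at 300 ppi
print(f"{upscale_factor(long_edge, 24):.2f}x upscale for a 24 in print")  # 1.76x upscale for a 24 in print
```

At 300 ppi, a 4,096-pixel edge covers roughly 13.7 inches, so a 24-inch print would need about a 1.76x enlargement, which is where an upscaler like Topaz or Magnific comes in.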

Commercial use and copyright

Google’s terms state that they don’t claim ownership of the content you create. However, they also make it clear that you are solely responsible for ensuring your work doesn’t infringe on existing copyrights. Under current US law, work generated purely by AI without significant human authorship is generally not eligible for copyright protection. I’m not your attorney, so I won’t give you legal advice beyond this: proceed carefully and consult a lawyer as needed.

Generate new crops/aspect ratios in 4K, with a simple prompt? Hell yes!

The Bottom Line

So, is Nano Banana actually for you? Here’s a simple breakdown:

You might want to try Nano Banana if:

- You’re a working photographer who wants to accelerate tedious retouching and make consistent edits across many photos at once.

- You frequently create client mockups and need to quickly change backgrounds or environments while keeping your subject identical.

- You already use AI generators like Midjourney for aesthetics but need a reliable tool to fix the flawed details (like hands, text, or logos) they get wrong.

- You’re curious about the next wave of AI and want a “conversational assistant” that understands your images, rather than just a simple prompt-to-image generator.

Nano Banana may not be for you if:

- You need final, high-resolution, print-ready images directly from the tool. For anything above 4K, you’ll need an upscaler.

- You like the free-flowing, less structured workflow of something like Midjourney.

- You are not willing to use it as one part of a larger workflow that involves upscaling or further refinement in tools like Photoshop.

PS – For a deeper dive into the principles of freedom, creativity, and building a life you love, especially in this era of rapid change, the Seven Levers For Life is a free, 7-day email course designed to give you just that. Sign up for the free Seven Levers For Life email course.

PPS – Have you checked out Affinity Studio and Meta AI? They’re great free alternatives to Adobe Creative Cloud and Midjourney.
