The era of AI video as a blinking, surreal novelty is over. We’ve moved past the “wow factor” of generating abstract visuals. The market has matured, and the conversation is no longer about who can create pixels, but who can integrate those pixels into a professional workflow. For creative professionals—the directors, editors, VFX artists, and designers—the question has shifted from “what can it make?” to “how can I control it?”

This is now an industrial sector. The tools are no longer toys; they are platforms demanding a space in your pipeline alongside Adobe Premiere Pro, Blender, and Cinema 4D. The new metrics for success are not just resolution or realism, but controllability, consistency, and integration. In this landscape, two major players demand attention: Runway, with its focus on directorial control, and Google’s Veo 3, the enterprise-grade workhorse. Choosing between them isn’t about picking the “best” tool, but the right tool for your specific workflow, budget, and creative philosophy.

The Core Products Explained

Before diving into a feature-by-feature comparison, it’s crucial to understand the fundamental difference in philosophy behind these two platforms. They are not direct competitors in the way that two non-linear editors (NLEs) are; they are built for different users with different end goals.

Google Veo 3

Google’s Veo 3 is an enterprise infrastructure play, hosted on the Vertex AI platform. Think of it less as a standalone creative application and more as a raw, powerful engine designed for high-volume pipelines and developer integration. Its core strength is its ability to adhere strictly to complex prompts. For commercial projects where brand safety and specific visual requirements are non-negotiable, Veo 3 is engineered to deliver predictable, high-fidelity results. It’s built for scale and reliability, targeting corporate users and developers who need to generate thousands of clips through an API.

Runway Gen-4

Runway, with its latest Gen-4 model, continues to position itself as the tool for the hands-on creative director or “auteur.” While other platforms chase pure photorealism, Runway chases control. It is designed for artists who don’t just want to prompt a result but want to direct it. Gen-4 is built on the premise that AI video is not the final product but a component in a larger creative assembly. Its features are geared toward integration with existing 3D and compositing software, making it a bridge between generative AI and traditional VFX pipelines.

How Creative Professionals Can Use The Products

The real difference between these platforms emerges when you put them to work. A tool’s value isn’t in its spec sheet, but in how it performs under the pressure of a real-world creative project.

Workflow Integration and Pipeline

How a tool fits into your existing workflow is everything. A powerful generator that creates a workflow bottleneck is worse than a less powerful one that integrates seamlessly.

Runway: The 3D and VFX Bridge

Runway Gen-4’s single most important feature for professional creatives is the ability to export camera tracking data. This is a game-changer. When Runway generates a video, it is solving a 3D camera move to create that footage. Gen-4 allows you to export this camera solution as a JSON or FBX file.

Here’s the practical workflow:

- Generate a dynamic scene in Runway—for example, a drone shot flying through a futuristic city.

- Export the camera data from that generation.

- Import that FBX file into Blender, Cinema 4D, or After Effects.

The result is a 3D camera in your scene that matches the movement of the AI-generated footage. You can composite 3D objects (logos, spaceships, UI elements, text) and they will stay locked to the Runway-generated plate. This “reverse pipeline,” where the AI dictates the camera move for your 3D software, solves one of the biggest headaches in compositing AI video: matching the unstable, often unpredictable perspective of the model.
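The export-and-import steps above can be sketched in code. This is only an illustration: the article does not document the layout of Runway's JSON export, so the `frame`, `position`, and `rotation_euler_deg` fields below are a hypothetical schema, and `CameraKey` and `load_camera_keys` are names invented for the example. The sketch parses per-frame camera records, sorts them by frame, and converts rotations to radians, which is the unit most 3D packages expect for scripted keyframes.

```python
import json
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CameraKey:
    frame: int
    position: Tuple[float, ...]
    rotation_rad: Tuple[float, ...]


def load_camera_keys(raw_json: str) -> List[CameraKey]:
    """Parse a HYPOTHETICAL per-frame camera export into sorted keyframes."""
    records = json.loads(raw_json)
    keys = [
        CameraKey(
            frame=int(rec["frame"]),
            position=tuple(float(v) for v in rec["position"]),
            # Assumed to be stored in degrees; converted to radians here.
            rotation_rad=tuple(
                math.radians(float(v)) for v in rec["rotation_euler_deg"]
            ),
        )
        for rec in records
    ]
    # Exports are not guaranteed to be ordered, so sort by frame number.
    return sorted(keys, key=lambda k: k.frame)


# Made-up sample data in the assumed schema (frames deliberately out of order).
SAMPLE = """[
  {"frame": 2, "position": [0.0, 1.0, 5.0], "rotation_euler_deg": [0, 90, 0]},
  {"frame": 1, "position": [0.0, 1.0, 6.0], "rotation_euler_deg": [0, 0, 0]}
]"""

keys = load_camera_keys(SAMPLE)
for key in keys:
    print(key.frame, key.position, key.rotation_rad)
```

Inside Blender, each key could then be applied to a camera object and recorded with `keyframe_insert`; that part is left out so the sketch runs without Blender installed. If you export FBX instead, the host application's own importer handles this step.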

This feature alone repositions Runway from a simple video generator to an essential tool for any hybrid workflow that combines AI footage with 3D elements.

Veo 3: The API-First Bottleneck

Veo 3 is designed to be accessed primarily through Google DeepMind’s VideoFX and the Vertex AI Studio. The Vertex AI route is developer-centric and requires a degree of technical comfort with the Google Cloud Platform (GCP) console. It’s less of a plug-and-play creative tool and more of an endpoint you call.

The most critical limitation for VFX artists is the lack of native alpha channel support. Veo 3 does not export videos with transparent backgrounds. If you want to generate an element like a wisp of smoke, a fire effect, or a character to composite over live-action footage, you are faced with a significant hurdle. You must generate the element against a solid background and then use third-party tools like Topaz Video AI or manual rotoscoping to create a matte. This adds time, complexity, and potential for ugly edge artifacts, making it a non-starter for serious VFX work. For context, this is a problem that open-source models like Alibaba’s Wan Alpha have already solved by generating transparency natively.
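To see where those edge artifacts come from, here is a minimal sketch of a distance-based color key, the simplest way to derive a matte from an element generated on a solid background. It is not how Topaz Video AI or any other specific tool works; the function name `key_alpha`, the green key color, and the `tolerance` and `softness` values are all illustrative assumptions.

```python
def key_alpha(pixel, key=(0, 255, 0), tolerance=60.0, softness=60.0):
    """Return alpha in [0, 1]: 0 near the key color, 1 far from it."""
    # Euclidean distance between the pixel and the key color in RGB space.
    dist = sum((p - k) ** 2 for p, k in zip(pixel, key)) ** 0.5
    if dist <= tolerance:
        return 0.0  # treated as background, fully transparent
    if dist >= tolerance + softness:
        return 1.0  # treated as foreground, fully opaque
    # In between: a linear ramp, which is where fringing tends to appear.
    return (dist - tolerance) / softness


# One row of pixels: pure background, a green-tinted edge, solid foreground.
row = [(0, 255, 0), (40, 200, 40), (200, 60, 30)]
alphas = [key_alpha(p) for p in row]
print(alphas)
```

The middle pixel ends up partially transparent while still carrying the background's green tint. Thin, soft elements such as smoke consist almost entirely of pixels like this, which is why a keyed matte is a poor substitute for native transparency.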

Controllability and Creative Direction

Your ability to influence the output is paramount. The “slot machine” approach of typing a prompt and hoping for the best is not a sustainable professional workflow.

Runway: Directorial Control

Beyond camera data, Gen-4 offers a suite of tools for granular direction:

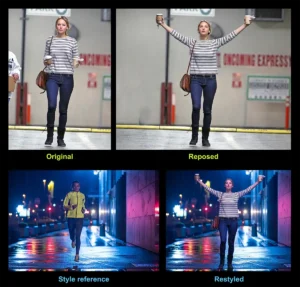

- Consistent Character: You can upload a reference image of a character and generate multiple clips where that character’s face, clothing, and general appearance remain stable across different scenes and lighting conditions. This is the first step toward creating narrative work with generative video.

- Act-One: This feature allows you to transfer the performance from a source video to your generated character. It’s a form of motion capture, enabling you to direct the movement and acting of your AI-generated talent.

- Motion Control Sliders: Runway’s interface gives you direct parameter sliders to control camera movements like pans, tilts, and zooms, offering a more deterministic way to craft a shot beyond just describing it in a prompt.

Veo 3: Prompt Adherence

Veo 3’s strength is its precision. It excels at following complex, multi-clause prompts without ignoring details or “hallucinating” unwanted elements. For a commercial director who needs to generate “a shot of a red SUV driving on a coastal road at sunset with lens flare,” Veo 3 is more likely to nail every single one of those requirements. This is a form of control, but it’s all front-loaded into the prompt. Once you hit “generate,” your influence is over. It’s a black box. This makes it highly valuable for commercial and corporate work where adherence to a specific brief is the primary metric of success.

Output, Quality, and Limitations

Both platforms produce high-quality 1080p footage, but their constraints reveal their intended use cases.

Veo 3: Short and Sharp

Veo 3 operates with rigid duration limits, with standard generations capped at 4, 6, or 8 seconds. While you can extend clips, users commonly report that stitching them together introduces visual jitter and breaks continuity. This makes Veo 3 ideal for generating short, specific B-roll clips but poorly suited to crafting longer narrative sequences. The model’s handling of physics is impressive, rendering complex object interactions convincingly, but this precision is confined to very short bursts.

Runway: Longer, with Purpose

Runway Gen-4 pushes the duration limit to 16 seconds. While still not long enough for a full scene, this provides significantly more breathing room for developing a shot. It allows for more complex camera moves and narrative beats to unfold within a single generation. While you still need to stitch multiple clips for long-form content, the 16-second window is a more practical and usable length for storytelling.
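A quick calculation shows what the longer window means in practice. The sketch below uses the clip limits quoted in this article (8 seconds for Veo 3, 16 for Runway Gen-4); the 60-second target sequence and the helper name `clips_needed` are assumptions chosen for illustration.

```python
import math


def clips_needed(target_seconds: float, max_clip_seconds: float) -> int:
    """Minimum number of generations needed to cover a sequence."""
    return math.ceil(target_seconds / max_clip_seconds)


target = 60  # hypothetical one-minute sequence

veo_clips = clips_needed(target, 8)
runway_clips = clips_needed(target, 16)

# Every boundary between two clips is a join where continuity can break.
print("Veo 3:", veo_clips, "clips,", veo_clips - 1, "joins")
print("Runway:", runway_clips, "clips,", runway_clips - 1, "joins")
```

Under these assumptions the same minute of footage needs 8 clips and 7 joins at an 8-second cap, against 4 clips and 3 joins at 16 seconds. That is fewer places for jitter or a continuity break to creep in.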

The Economics: The Real Cost of Creation

The pricing models are perhaps the most telling difference between the two platforms and will be the deciding factor for many freelancers and independent creators.

Veo 3: The Consumption Model and “Bill Shock”

Veo 3 uses a high-cost, consumption-based model, charging approximately $0.75 per second of generated video. This model is transparent and predictable for an enterprise client with a defined need (“we need 500 four-second clips for our app”). However, for a creative professional, it’s dangerous.

The creative process is iterative. It involves experimentation, failure, and refinement. In Veo 3’s metered environment, every one of those failed experiments costs you real money. There are reports of users experiencing “bill shock” after a session of creative exploration led to hundreds of dollars in unexpected charges. This pricing structure penalizes iteration and makes it prohibitively expensive for an independent creator to “find” the perfect shot.
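The arithmetic is worth spelling out. This sketch uses the approximate $0.75-per-second rate quoted above; the 40-attempt exploration session is a made-up figure for illustration, and `generation_cost` is simply a helper named for this example.

```python
RATE_PER_SECOND = 0.75  # approximate rate quoted in this article, in USD


def generation_cost(clips: int, seconds_per_clip: int,
                    rate: float = RATE_PER_SECOND) -> float:
    """Total metered cost: every generated second is billed, keepers or not."""
    return clips * seconds_per_clip * rate


# The enterprise brief from above: 500 four-second clips, known in advance.
enterprise = generation_cost(500, 4)

# A hypothetical exploration session: 40 attempts at 8 seconds each,
# most of which will be thrown away.
exploration = generation_cost(40, 8)

print(f"Enterprise batch: ${enterprise:,.2f}")
print(f"Exploration session: ${exploration:,.2f}")
```

The enterprise batch comes to $1,500.00, a number that can be budgeted before any clip is rendered. The exploration session comes to $240.00 for a single afternoon, with no guarantee that any of the 40 attempts is usable.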

Runway: The Subscription Model for Iteration

Runway operates on a familiar credit-based subscription model. For a flat monthly fee (plans vary), you get a set number of credits. This is a model built for creativity. It gives you a predictable budget and the freedom to experiment. Within your monthly credit allowance, you can run generation after generation to get a shot just right without worrying that each click is draining your bank account. A “failed” generation costs you a few credits, not a few dollars. This low-risk environment is essential for anyone whose process isn’t a straight line from prompt to final product. When compared to the broader market, Runway’s mid-tier subscription is a value play against the high per-second costs of Veo 3 and OpenAI’s Sora 2, while offering more control than cheaper, high-volume models like Kling.

The Bottom Line: Which Is For You?

The choice between Runway Gen-4 and Google Veo 3 is a strategic one. It’s about aligning a tool’s core philosophy with your professional needs.

Google Veo 3 Is For You If…

You work in a corporate, enterprise, or high-volume content environment. You need a reliable, scalable engine that delivers predictable, brand-safe results based on precise prompts. Your workflow is more about production than experimentation, and you have a predictable budget that can handle a consumption-based pricing model. You are comfortable working within a developer-focused ecosystem like Google Cloud, and your final output doesn’t require complex compositing or VFX integration. Think of Veo 3 as a world-class render farm API: a tool for execution, not exploration.

Runway Gen-4 Is For You If…

You are a hands-on creative director, filmmaker, VFX artist, or 3D generalist. You value granular control and need your AI tools to integrate with your existing professional software stack. Your workflow involves compositing 3D elements, and the idea of exporting camera data directly to Blender or After Effects solves a major production headache for you. You believe AI video is a collaborative process between the artist and the machine, not just a prompt-and-receive transaction. The predictable cost of a subscription model gives you the freedom to iterate and experiment. Runway isn’t just a generator; it’s a director’s tool built for the modern hybrid artist.