The gold rush of AI video novelty is over. We’ve moved past the phase of surreal, morphing clips and unstable physics that filled social feeds. The market has matured, and the conversation is no longer about the simple act of generation. It’s about integration. It’s about workflow. The defining question for creative professionals closing out 2025 is not who can generate pixels, but who can integrate those pixels into professional non-linear editing (NLE) pipelines, 3D compositing environments, and real-world production schedules.

The battleground for creatives has shifted beyond resolution and runtime. The new metrics of success are controllability, consistency, and integration. This is the industrialization of generative video, and understanding the key players is no longer optional—it’s essential for staying relevant. At the top of this new landscape sits OpenAI’s Sora 2, a platform that represents the transition of generative video from a research preview to a commercial infrastructure layer.

What Exactly Is Sora 2?

Sora 2, released by OpenAI in September 2025, is a commercial-grade, AI-powered video generation model. But describing it merely as a “text-to-video” tool misses the point. Its core technical claim is to be a “general-purpose simulator of the physical world.” This isn’t just marketing speak; it points to a significant architectural leap over its predecessor. The model is engineered to understand and replicate complex object interactions and permanence, making it a powerful tool for narrative and commercial work.

Unlike the freemium models flooding the market, Sora 2 occupies the premium tier, targeting enterprise users and high-end creative professionals. Its most significant strategic move is its deep embedding within the Microsoft and Adobe ecosystems. By integrating directly into platforms like Microsoft 365 Copilot and Adobe Premiere Pro, OpenAI has positioned Sora 2 not as a standalone toy, but as a foundational utility layer for a massive user base. It’s designed to be a component in your existing toolkit, not a replacement for it.

How Can Creative Professionals Use It?

Sora 2’s value isn’t in generating abstract visuals; it’s in its application to specific, demanding creative workflows. The standout features are not just about visual fidelity but about solving long-standing production headaches.

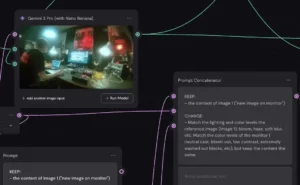

NLE Integration with Adobe Premiere Pro

This is the killer app for video editors. The direct integration of Sora 2 into Adobe Premiere Pro via the Firefly video model ecosystem fundamentally alters the post-production workflow. This isn’t about exporting a clip from one app and importing it into another. It’s about generative capability living directly inside your timeline.

- Generate B-Roll: You can create context-specific cutaways without ever leaving Premiere. Need a 5-second shot of a rainy street in Tokyo to cover an edit? You can prompt for it, generate it, and drop it onto V2, all within the same interface.

- Generative Extend: This feature is a lifesaver. It allows the model to add frames to the beginning or end of an existing clip. That perfect take that was cut just a few frames too short can now be seamlessly extended, saving you a costly reshoot or a frustrating edit.

- Object Removal and Addition: Using the generative priors of the model, you can perform contextual edits on scene elements. Need to remove a stray coffee cup from a table in a locked-off shot? You can mask and replace it with a clean plate generated by Sora 2, directly in your NLE.

Advanced Physics and Object Permanence

For anyone working in narrative, the “physics engine” capability of Sora 2 is a critical threshold. Previous generations of AI video models frequently hallucinated details: a character’s hands might have six fingers for a few frames, or a prop might vanish when briefly obscured. Sora 2’s improved object permanence means that a character walking behind a pillar will re-emerge as the same character, with their geometry and texture intact. It can accurately render complex scenarios that previously broke AI models, like a person solving a Rubik’s Cube or a basketball interacting realistically with a net. This reliability is the baseline requirement for using generative video in serious storytelling.

Character Consistency with “Cameos”

Character consistency has been the Achilles’ heel of AI video since its inception. Sora 2 tackles this with its “Cameos” feature. This allows you to train the model on a specific character’s likeness, whether from a photograph of a real person (with consent) or a generated persona. Once that “Cameo” is established, you can reliably insert that character into different scenes while retaining their core facial structure and physical details. This is the key to building multi-shot narratives where the talent needs to remain consistent from the wide shot to the close-up.

Audio Generation: A Useful First Pass

Sora 2 also introduces native audio generation, capable of synthesizing synchronized dialogue, sound effects, and ambient noise. The model is technically impressive in its ability to match sounds to visual events, like the clink of a glass as it’s set down. However, the fidelity often falls short of professional sound design standards, with a tendency toward robotic or uncanny tonality. For professional workflows, this audio serves best as a high-quality scratch track for timing and pacing, rather than a final deliverable.

The Cost of a Premium Tool

This power comes at a price. Sora 2 is positioned as a premium product, and its pricing reflects that. The API cost for 1080p resolution is approximately $0.50 per second, so a single minute of footage (60 seconds × $0.50) costs around $30 in API charges, not including the subscription fees for ChatGPT Pro or Microsoft Copilot. This structure makes it a tool for high-budget productions or enterprise environments where the cost is easily absorbed. Furthermore, the model is heavily guardrailed with C2PA watermarking and strict content moderation, which, while necessary, can sometimes block legitimate creative prompts.
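
To make the per-second pricing above concrete, here is a minimal budgeting sketch. It assumes the article's approximate rate of $0.50 per second at 1080p, which is not an official price list, and the function name `generation_cost` and its `takes` parameter are hypothetical, introduced only for illustration. It also assumes every generated take is billed, including takes that end up unused.

```python
# Assumption: the article's approximate 1080p API rate, not an official price list.
RATE_USD_PER_SECOND = 0.50


def generation_cost(seconds: float, takes: int = 1,
                    rate: float = RATE_USD_PER_SECOND) -> float:
    """Estimate the API charge in USD for `takes` generations of `seconds` each."""
    if seconds < 0 or takes < 0:
        raise ValueError("seconds and takes must be non-negative")
    # Cost scales linearly with total generated duration.
    return round(seconds * takes * rate, 2)


if __name__ == "__main__":
    print(generation_cost(60))          # one 60-second minute of footage -> 30.0
    print(generation_cost(5, takes=8))  # eight attempts at a 5-second B-roll shot -> 20.0
```

The second call illustrates why iteration matters for budgeting: under these assumptions, eight attempts at a short cutaway cost two thirds as much as a full minute of footage.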

How Sora 2 Stacks Up: A Market Snapshot

No tool exists in a vacuum. To understand Sora 2’s place, you have to understand the ecosystem it competes in. The market has bifurcated, with different platforms specializing in solving different creative problems.

Google Veo 3: The Enterprise Workhorse

Google’s Veo 3, hosted on Vertex AI, is an enterprise-first platform. Its key strength is prompt adherence: the ability to closely follow complex, multi-clause instructions. This makes it ideal for commercial work with strict brand guidelines. However, its hard duration cap of 8 seconds per clip and, most critically, its lack of native alpha channel support make it a poor choice for VFX artists who need to composite elements.

Kuaishou Kling: The Indie Disruptor

Kling, from Chinese tech firm Kuaishou, is the value leader. Its aggressive, subscription-based pricing makes it the workhorse for independent creators who need to iterate constantly without breaking the bank. Its defining feature is a “Professional Mode” that offers granular, deterministic camera control—letting you specify pans, tilts, and zooms with parameter sliders. While its visual fidelity is phenomenal for short clips, it can struggle with temporal consistency on longer takes.

Alibaba Wan: The Open-Source Revolution

Alibaba’s Wan is the most structurally disruptive model on the market. Its “Wan Alpha” variant is the only major model architected to generate video with a native alpha channel (transparency). For VFX artists, this is transformative. It allows for the creation of pixel-perfect smoke, fire, or semi-transparent elements that can be dropped directly into a Nuke or After Effects composite without keying. Being open-source (Apache 2.0 license), it can be run locally on consumer-grade hardware like an NVIDIA RTX 4090, shifting the cost from a recurring subscription to a one-time hardware investment.
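
To show why a native alpha channel removes the keying step, here is a minimal sketch of the standard "over" compositing operation applied to a single pixel. It assumes straight (unpremultiplied) alpha and color values in the range 0 to 1. The article does not say which alpha convention Wan Alpha uses, so treat that as an assumption, and note that the function name `over` is illustrative rather than part of any tool's API.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def over(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Composite a straight-alpha foreground pixel over an opaque background pixel."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    # Each channel is a weighted blend: alpha of the foreground, the rest from the background.
    r, g, b = (f * alpha + k * (1.0 - alpha) for f, k in zip(fg, bg))
    return (r, g, b)


if __name__ == "__main__":
    smoke = (1.0, 1.0, 1.0)   # white, semi-transparent element
    plate = (0.0, 0.5, 1.0)   # background plate pixel
    print(over(smoke, 0.5, plate))  # (0.5, 0.75, 1.0)
```

With the alpha value delivered per pixel by the generator, the blend is a direct calculation. Without it, the compositor has to estimate that value from a green screen or a luminance key, which is where soft elements like smoke usually degrade.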

Runway Gen-4: The Director’s Tool

Runway has always focused on control over pure realism, and Gen-4 doubles down on this. Its game-changing feature is the ability to export camera tracking data as JSON or FBX files. This allows a 3D artist to generate a scene in Runway, export the camera move, and bring it into Blender or Cinema 4D to perfectly track 3D objects into the AI-generated shot. This bridges the gap between AI generation and professional 3D pipelines.

ByteDance Seedance 1: The Narrative Engine

Seedance, from the parent company of TikTok, is a narrative machine. Its standout capability is “Native Multi-Shot Storytelling.” Instead of generating a single continuous shot, Seedance can generate a sequence of shots—like a wide establishing shot followed by a close-up—from a single prompt, maintaining character and lighting consistency across the cuts. This is built for the fast-paced, montage-heavy grammar of social media.

Midjourney: The Aesthetic Specialist

Midjourney remains the master of the still image. Its video capability is an extension of this, functioning as an Image-to-Video (I2V) tool. You generate a beautiful, texturally rich image with Midjourney’s superior aesthetic engine and then use the “Animate” function to impart limited motion. The result is often more artistic than a native video generation, but the motion itself is typically confined to simple loops or pans.

Is Sora 2 Right for You?

The era of the “one-size-fits-all” AI tool is over. The optimal workflow in 2025 is a hybrid one, using the best tool for each specific task.

Sora 2 is the right choice if you are a creative professional or editor deeply embedded in the Adobe and Microsoft ecosystems. Its value is not just in its powerful physics simulation and impressive visual fidelity, but in its seamless integration into the NLE you already use every day. If your work requires believable object permanence for narrative storytelling or the ability to generate B-roll without breaking your workflow, Sora 2 is a best-in-class solution.

However, you must have the budget to support it. Its premium, consumption-based pricing places it out of reach for many independent creators. If your primary focus is VFX compositing, the native alpha channel in Alibaba’s Wan is a much more direct solution. If you need precise, director-level camera control and 3D integration, Runway Gen-4 is built for you. And if you operate on a tight budget and need a tool for rapid iteration, Kuaishou’s Kling offers the most value for your money.

The future belongs not to the prompt engineer, but to the AI Orchestrator—the creative who can expertly weave these disparate, specialized models into a unified professional vision. Sora 2 is a powerful, essential instrument in that orchestra, but it’s not the only one you need to know how to play.