If you’re a photographer, designer, or filmmaker trying to navigate the current landscape, you know the noise is getting louder. The market is flooded with synthetic media, and the line between “captured” and “generated” is blurring faster than a bad Gaussian blur.

You’ve likely heard the term SynthID thrown around in tech headlines or deep-dive forums. Maybe you heard it’s a way to protect your work. Maybe you heard it’s a way to spot the fakes.

Here is the direct, cut-through-the-noise explanation of what SynthID actually is, how it works under the hood, and—critically—what it means for your career as a creative professional.

The Short Version: What It Is

SynthID is a digital watermarking technology developed by Google DeepMind. It embeds an imperceptible signal into AI-generated content—text, audio, images, and video—allowing software to identify it as artificial.

To be clear right up front: SynthID is not a tool you use to “protect” your human-made photos from being stolen. You cannot apply SynthID to your RAW files to stop AI scraping.

Instead, it is a system built into generative tools (like Google’s Gemini, Imagen, and Veo) to tag their output. Think of it as a manufacturer’s stamp on the chassis of a car, except it’s woven into the atomic structure of the metal itself.

How SynthID Works (The Technical Breakdown)

Forget the messy analogies. You’re a pro, so let’s talk about the actual mechanics. SynthID operates differently depending on the medium, but the goal is always the same: robustness. It needs to survive compression, color grading, and editing without destroying the quality of the file.

Images and Video: Pixel-Level Manipulation

For visual media, SynthID doesn’t just add a metadata tag to the EXIF data, which anyone can strip out with a screenshot. It is also separate from any visible watermark or logo stamped on the image.

It uses two deep learning models trained in tandem. The first model (the “embedder”) subtly adjusts the pixel values of the image during the generation process. These adjustments are mathematically significant to a computer but invisible to the human eye.

The second model (the “detector”) is trained to recognize these specific patterns. Because the watermark is distributed across the entire image at the pixel level, it is incredibly resilient. You can crop the image, rotate it, compress it to a murky JPEG, or throw a high-contrast filter on it, and the detector can usually still find the signal.
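To make the embed-and-detect idea concrete, here is a minimal sketch. It is not SynthID’s method: Google’s embedder and detector are trained neural networks, while this toy swaps in a classic keyed pseudorandom pattern and a simple correlation test. The function names (`embed`, `detect`, `rough_edit`), the key, and the strength value are all assumptions made up for illustration.

```python
import random

def keyed_pattern(key: int, n: int) -> list[int]:
    """Pseudorandom +1/-1 pattern that only the key holder can reproduce."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed(pixels: list[int], key: int, strength: int = 2) -> list[int]:
    """Nudge every pixel up or down by `strength`, following the keyed pattern."""
    pattern = keyed_pattern(key, len(pixels))
    return [min(255, max(0, p + strength * s)) for p, s in zip(pixels, pattern)]

def detect(pixels: list[int], key: int) -> float:
    """Correlate the image with the keyed pattern.

    Scores hover near 0 for unmarked images and near `strength` for marked ones.
    """
    pattern = keyed_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)

def rough_edit(pixels: list[int], seed: int = 0) -> list[int]:
    """Simulate a brightness lift plus sensor-style noise (hypothetical edit)."""
    rng = random.Random(seed)
    return [min(255, max(0, p + 10 + rng.randint(-8, 8))) for p in pixels]

# A flat list of values stands in for a 200x200 grayscale image.
rng = random.Random(7)
photo = [rng.randint(60, 200) for _ in range(200 * 200)]
marked = rough_edit(embed(photo, key=42))

print("edited, marked copy:", round(detect(marked, key=42), 2))
print("original photo:     ", round(detect(photo, key=42), 2))
```

A change of two levels out of 255 is invisible, yet because it is spread across every pixel the correlation stays well above zero after the brightness and noise edit. Unlike the real thing, this toy breaks as soon as you crop or rotate, since the pattern no longer lines up. That gap is exactly what the learned detector is trained to close.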

Audio: The Spectrogram Method

For audio generated by tools like Google’s Lyria model, SynthID works on the spectrogram, a visual representation of how the audio’s frequency content changes over time.

It embeds the watermark into the frequency domain of the audio track. That means the watermark isn’t just a high-pitched frequency a human can’t hear (which MP3 compression could cut). Instead, it’s woven into the core data of the sound wave itself, which allows it to survive lossy compression (like MP3 or AAC), added noise, and even speed changes.
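If “embedding in the frequency domain” sounds abstract, the sketch below shows the general idea on a tiny clip. Treat it as an illustration under heavy assumptions: it uses a plain Fourier transform and a textbook trick (forcing one keyed frequency bin to be louder than its partner), not SynthID’s learned spectrogram model. The names `embed_audio`, `detect_audio`, and `keyed_pairs` and the `boost` value are hypothetical.

```python
import cmath
import math
import random

def dft(x: list[float]) -> list[complex]:
    """Naive discrete Fourier transform (fine for short clips)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X: list[complex]) -> list[float]:
    """Inverse transform back to real-valued samples."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

def keyed_pairs(key: int, n: int, count: int) -> list[tuple[int, int]]:
    """Pick `count` pairs of distinct mid-band frequency bins from the secret key."""
    rng = random.Random(key)
    bins = rng.sample(range(n // 8, n // 2 - 1), 2 * count)
    return list(zip(bins[:count], bins[count:]))

def embed_audio(samples: list[float], key: int, count: int = 24,
                boost: float = 1.5) -> list[float]:
    """Make the first bin of every keyed pair louder than the second."""
    n = len(samples)
    X = dft(samples)
    for a, b in keyed_pairs(key, n, count):
        mag_a, mag_b = abs(X[a]), abs(X[b])
        targets = ((a, max(mag_a, mag_b) * boost), (b, min(mag_a, mag_b)))
        for k, magnitude in targets:
            X[k] = cmath.rect(magnitude, cmath.phase(X[k]))  # keep the phase
            X[n - k] = X[k].conjugate()  # mirror bin keeps the signal real
    return idft(X)

def detect_audio(samples: list[float], key: int, count: int = 24) -> float:
    """Fraction of keyed pairs in the expected order: about 0.5 if unmarked."""
    X = dft(samples)
    pairs = keyed_pairs(key, len(samples), count)
    return sum(abs(X[a]) > abs(X[b]) for a, b in pairs) / count

# A low tone plus hiss stands in for a generated audio clip.
rng = random.Random(3)
clip = [math.sin(2 * math.pi * 5 * t / 256) + rng.gauss(0, 1) for t in range(256)]
marked = embed_audio(clip, key=11)
quieter = [0.5 * s + rng.gauss(0, 0.005) for s in marked]  # volume cut plus noise

print("marked, then edited:", detect_audio(quieter, key=11))
print("untouched clip:     ", detect_audio(clip, key=11))
```

The point of working on frequency content rather than raw samples is that a volume change or a little added noise shifts every bin together, so the keyed relationships survive. Surviving real MP3 encoding or speed changes takes far more than this toy, which is where the trained models come in.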

Text: Tournament Sampling

Text is the hardest nut to crack because you can’t “hide” pixels in a plain text file. SynthID for text (integrated into Gemini) uses a statistical method called Tournament Sampling.

Large Language Models (LLMs) generate text by predicting the next token (a word or a piece of a word) in a sequence based on probability. SynthID intervenes in this prediction layer. It subtly biases the model’s choice of tokens using a pseudorandom pattern based on a secret key.

To a human reader, the text looks completely natural. To the detection software, the statistical pattern of word choices reveals a mathematical “signature” that indicates, with a measurable level of confidence, that the text was machine-generated.

The catch: Text watermarking is the most fragile. If you run the text through a translator or ask another AI to “rewrite this paragraph,” the statistical pattern is often broken.
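Here is a toy version of the sampling, detection, and fragility described above. It is a simplified sketch, not Google’s implementation: the “model” just picks words at random from a 50-word vocabulary, the scoring function is a single hashed bit, and `WINDOW`, `LAYERS`, `rewrite`, and the key strings are assumptions chosen for illustration.

```python
import hashlib
import random

VOCAB = [f"w{i}" for i in range(50)]  # toy vocabulary
WINDOW = 4   # previous tokens that seed the pseudorandom pattern
LAYERS = 3   # tournament rounds, so 2**LAYERS candidates per token

def g_value(key: str, context: list[str], token: str, layer: int) -> int:
    """Keyed pseudorandom bit for a (context, token, layer) triple."""
    data = f"{key}|{' '.join(context)}|{token}|{layer}".encode()
    return hashlib.sha256(data).digest()[0] & 1

def toy_model(rng: random.Random) -> str:
    """Stand-in for an LLM: every word is equally likely."""
    return rng.choice(VOCAB)

def tournament_sample(key: str, context: list[str], rng: random.Random) -> str:
    """Draw candidates, then let them knock each other out on their g-values."""
    candidates = [toy_model(rng) for _ in range(2 ** LAYERS)]
    for layer in range(LAYERS):
        winners = []
        for a, b in zip(candidates[::2], candidates[1::2]):
            ga, gb = g_value(key, context, a, layer), g_value(key, context, b, layer)
            if ga == gb:
                winners.append(rng.choice((a, b)))  # tie: pick at random
            else:
                winners.append(a if ga > gb else b)
        candidates = winners
    return candidates[0]

def generate(key: str, length: int, rng: random.Random,
             watermark: bool = True) -> list[str]:
    tokens = ["<s>"] * WINDOW  # padding so the first word has a context
    for _ in range(length):
        context = tokens[-WINDOW:]
        tokens.append(tournament_sample(key, context, rng) if watermark
                      else toy_model(rng))
    return tokens

def score(key: str, tokens: list[str]) -> float:
    """Mean g-value over the text: about 0.5 if unmarked, higher if marked."""
    bits = [g_value(key, tokens[i - WINDOW:i], tokens[i], layer)
            for i in range(WINDOW, len(tokens)) for layer in range(LAYERS)]
    return sum(bits) / len(bits)

def rewrite(tokens: list[str], rng: random.Random) -> list[str]:
    """Crude stand-in for a paraphrase: replace every second word."""
    return [toy_model(rng) if i % 2 else t for i, t in enumerate(tokens)]

rng = random.Random(0)
marked = generate("secret-key", 300, rng)
print("marked:   ", round(score("secret-key", marked), 3))
print("rewritten:", round(score("secret-key", rewrite(marked, rng)), 3))
```

Notice that the detector never sees the model, only the text and the key. It also explains the fragility: each word’s score depends on the words right before it, so swapping words changes the context and the signal falls back toward coin-flip territory.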

Why This Matters To Your Career

You might be thinking, “If I can’t use this to watermark my own photos, why do I care?”

You care because trust is your new currency.

As we move forward, the creative industry is splitting into two distinct value propositions: Synthetic Efficiency and Human Authenticity. Understanding SynthID is the first step in mastering the second one.

1. Verification is the New Standard

Clients are already asking for proof of origin. We are seeing a shift where commercial contracts specifically mandate “human-created” deliverables for copyright and liability reasons.

While SynthID is currently limited to Google’s ecosystem (it detects content from Gemini, Imagen, etc.), it is part of a larger push for transparency. Tools like Content Credentials (C2PA) allow you to cryptographically sign your work, while SynthID allows the machines to sign their work.

Your workflow will soon involve checking incoming assets from freelancers or stock sites. If you’re an Art Director, you need to know if that “raw” footage is actually Veo output.

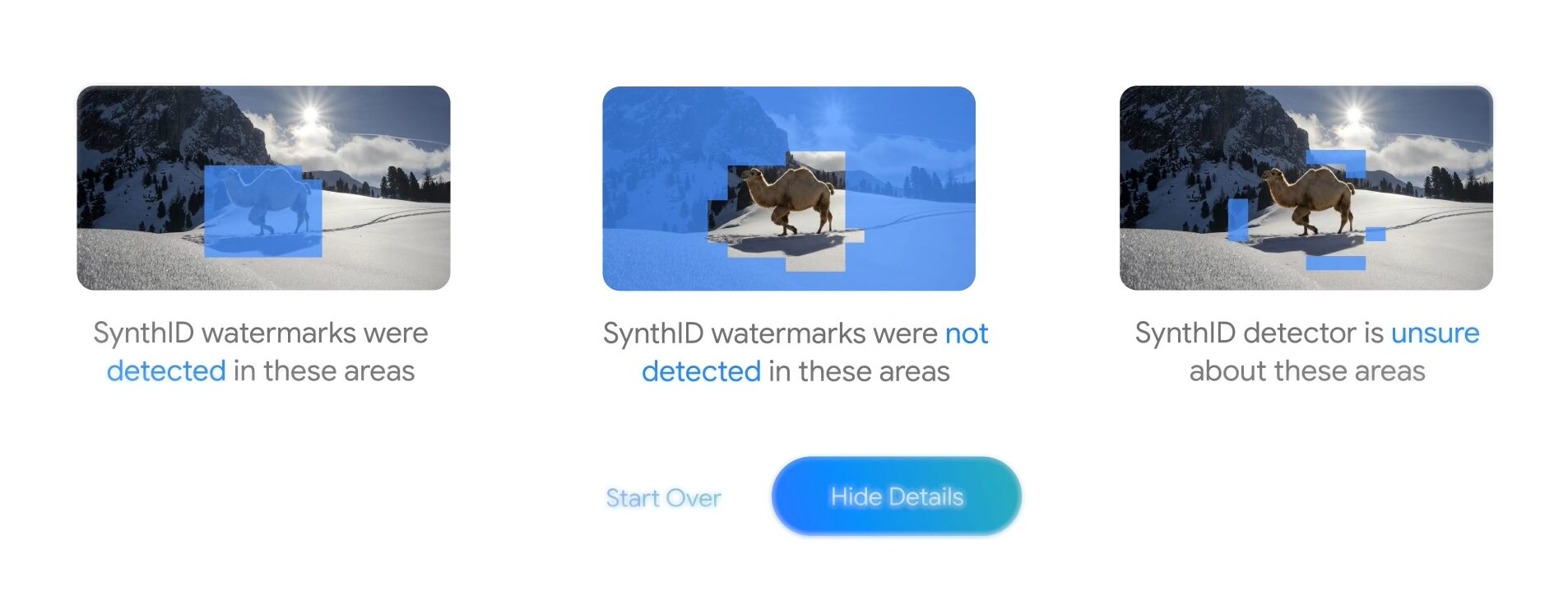

2. How to Check for SynthID

Right now, you can’t just download a “SynthID App” on the App Store, but the integration is rolling out across Google’s suite.

- Google Search & Photos: Look for the “About this image” feature. Google is rolling out updates where this panel will flag images containing the SynthID watermark.

- The Gemini Test: You can currently upload an image to Gemini and ask, “Is this AI generated?” If the image contains a SynthID watermark, Gemini is programmed to flag it.

- SynthID Detector Beta: Google has a web-based detector tool currently in beta for verified media partners. Keep your eye on this—it will eventually become a standard utility for all of us, much like a copyright reverse-search.

The “Human Premium” Strategy

Because SynthID is opt-in for model creators (like Google), you can’t rely on it to catch everything. Midjourney and open-source Stable Diffusion models running on a private server don’t apply it yet.

However, you can use this knowledge to position yourself.

Don’t fight the tech; validate your craft.

Start using C2PA (Content Credentials), the provenance standard aimed at creators. This is the counterpart to SynthID.

- SynthID says: “This was made by a robot.”

- C2PA says: “This was made by [Your Name] on [Date] using [Camera Model].”
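The contrast is easier to see in code. The sketch below shows the general shape of a signed provenance claim: hash the file, attach who, when, and what camera, then sign the bundle. It is only an illustration. Real Content Credentials use certificate-based signatures and a manifest stored with the file, whereas this toy uses a shared-secret HMAC from Python’s standard library. The function names, field names, and example values are hypothetical.

```python
import hashlib
import hmac
import json

def sign_asset(asset: bytes, claims: dict, signing_key: bytes) -> dict:
    """Bind the claims to the exact file bytes, then sign the bundle."""
    payload = dict(claims, asset_sha256=hashlib.sha256(asset).hexdigest())
    body = json.dumps(payload, sort_keys=True).encode()
    signature = hmac.new(signing_key, body, hashlib.sha256).hexdigest()
    return {"claims": payload, "signature": signature}

def verify_asset(asset: bytes, manifest: dict, signing_key: bytes) -> bool:
    """Check that the claims are untampered and still match this file."""
    body = json.dumps(manifest["claims"], sort_keys=True).encode()
    expected = hmac.new(signing_key, body, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    file_ok = manifest["claims"]["asset_sha256"] == hashlib.sha256(asset).hexdigest()
    return signature_ok and file_ok

# Placeholder bytes and details stand in for a real capture.
raw_file = b"placeholder bytes standing in for a RAW capture"
key = b"demo-signing-key"  # assumption: a real workflow uses a certificate
manifest = sign_asset(
    raw_file,
    {"creator": "Your Name", "created": "2025-01-01", "camera": "Camera Model"},
    key,
)

print("original file verifies:", verify_asset(raw_file, manifest, key))
print("altered file verifies: ", verify_asset(raw_file + b"!", manifest, key))
```

This is the mirror image of a watermark. A watermark is designed to survive edits, while a signed claim is designed to break the moment the file or the claim changes, which is what makes it usable as proof.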

Cameras from Leica (M11-P), Nikon (Z6 III), and Sony are beginning to support C2PA signatures natively. Using these tools gives your work deeper commercial value. You aren’t just selling an image; you’re selling the liability-free certainty that a human made it.

The Bottom Line

SynthID is a technical milestone. It proves that we can invisibly tag generated media without ruining it. It is robust, clever, and necessary.

But for you? It’s a signal that the “Wild West” era of AI slop is ending. We are moving toward a labeled internet.

Your move is simple:

- Educate yourself on which tools use verification (Google Gemini/Imagen = Yes).

- Verify your incoming assets using the available tools to ensure you aren’t accidentally passing off AI work as human to a client.

- Double down on your human provenance. If the machines are watermarking their work, you’d better be signing yours.

Stay sharp. Keep creating.